Understanding Transformers - Attention Is All You Need explained

1. Introduction

2. Attention

2.1. Scaled Dot-Product Attention

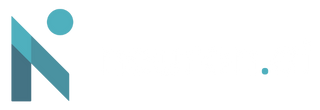

Once we obtain our query, key and value vectors by passing the embedded input through three different linear layers, the scaled dot-product attention is computed. As illustrated in Figure 1, the attention weights are calculated by taking the dot product of the queries and the keys, dividing it by the square root of the dimension of the keys, and applying the softmax function to the resulting matrix. The attention weights are then multiplied with the values to obtain the output. Without the scaling, the dot products could grow large in magnitude when the dimension of the keys is large. This would push the softmax function into regions where it has extremely small gradients.
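
The computation described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function names, the toy dimensions and the random projection matrices `w_q`, `w_k` and `w_v` (standing in for the three learned linear layers) are assumptions made for the example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row-wise maximum for numerical stability.
    shifted = x - np.max(x, axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=axis, keepdims=True)

def scaled_dot_product_attention(queries, keys, values):
    """queries: (n_q, d_k), keys: (n_k, d_k), values: (n_k, d_v)."""
    d_k = keys.shape[-1]
    scores = queries @ keys.T / np.sqrt(d_k)  # (n_q, n_k), scaled by sqrt(d_k)
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ values, weights

# Toy input: 3 embedded tokens of dimension 8 (hypothetical sizes).
rng = np.random.default_rng(0)
x = rng.normal(size=(3, 8))
# Random matrices stand in for the three learned linear layers.
w_q, w_k, w_v = (rng.normal(size=(8, 4)) for _ in range(3))

output, weights = scaled_dot_product_attention(x @ w_q, x @ w_k, x @ w_v)
print(output.shape, weights.shape)
```

Each row of `weights` describes how strongly one token attends to every token in the sequence, and `output` is the corresponding weighted sum of the value vectors.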

Short explanation: To better understand why the scaling is necessary, take two simple two-by-two matrices with 1s on one diagonal and -1s on the other. The entries of each matrix have a mean of 0 and a variance of 1. If we multiply these two matrices, we get a matrix with 2s on one diagonal and -2s on the other. The entries of this matrix still have a mean of 0, but their variance is now 4. More generally, if the components of a query and a key are independent with mean 0 and variance 1, their dot product has mean 0 and a variance equal to the dimension of the keys. This means that the variance of the dot product can be very high if we have keys and queries with very large dimensions.
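
The effect can also be checked numerically. The following sketch assumes that the components of the queries and keys are independent standard normal samples, which is an idealization and not something a trained model guarantees. The helper name `dot_product_variance` and the sample size are chosen for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples = 10_000  # number of random query/key pairs per experiment

def dot_product_variance(d_k, scale=False):
    # Components are drawn independently with mean 0 and variance 1.
    q = rng.normal(size=(n_samples, d_k))
    k = rng.normal(size=(n_samples, d_k))
    dots = np.sum(q * k, axis=1)      # one dot product per pair
    if scale:
        dots = dots / np.sqrt(d_k)    # the scaling used in the attention
    return dots.var()

for d_k in (4, 64, 512):
    unscaled = dot_product_variance(d_k)
    scaled = dot_product_variance(d_k, scale=True)
    print(f"d_k={d_k}: unscaled variance {unscaled:.1f}, scaled variance {scaled:.2f}")
```

Under these assumptions the unscaled variance should come out close to the key dimension, while the scaled variance should stay close to 1 regardless of the dimension.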

2.2. Multi-Head Attention

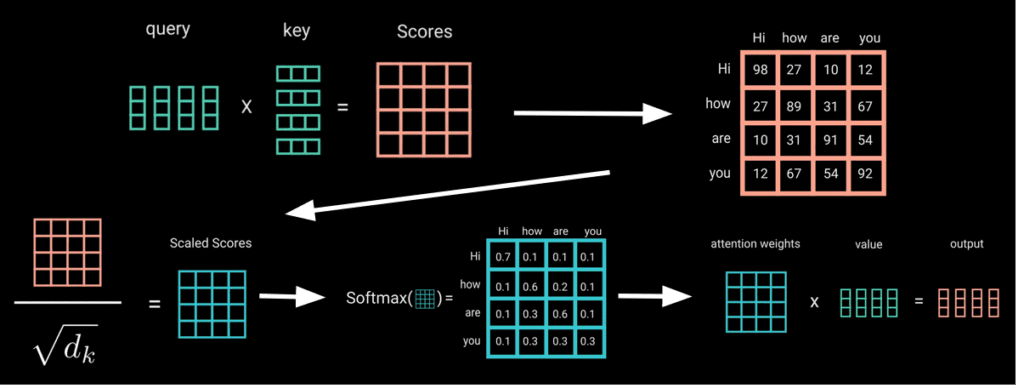

Instead of performing a single attention function with one key, value and query vector, the embedded input tokens are linearly projected h times with different, learned linear projections to obtain h heads each consisting of different value, key and query vectors.

In the paper, the authors used a value of 8 for h. Afterward, as visualized in Figure 3, the attention function is performed on each of these heads in parallel. Finally, the outputs of the heads are concatenated and passed through a linear layer. The idea behind Multi-Head Attention is that different heads can capture different relationships between words. Note that after concatenation, the output vector consists of data from h different heads, and the linear layer then “mixes” the data of all the heads. Figure 4 visualizes the relationships captured by two different heads.
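
The steps above (project, attend per head, concatenate, mix) can be sketched as follows. This is an illustrative NumPy version under stated assumptions: the weights are random instead of learned, biases are omitted, and the h per-head projections are stored as slices of one (d_model, d_model) matrix per role, which is a common implementation shortcut and not something the text above prescribes.

```python
import numpy as np

def softmax(x, axis=-1):
    shifted = x - np.max(x, axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=axis, keepdims=True)

def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """x: (seq_len, d_model); w_q, w_k, w_v, w_o: (d_model, d_model)."""
    seq_len, d_model = x.shape
    d_head = d_model // num_heads  # per-head dimension

    def split_heads(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)
    # Scaled dot-product attention, computed for all heads at once.
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (num_heads, seq_len, seq_len)
    heads = softmax(scores) @ v                          # (num_heads, seq_len, d_head)
    # Concatenate the heads and mix them with the final linear layer.
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o

rng = np.random.default_rng(0)
d_model, num_heads, seq_len = 512, 8, 5  # h = 8 as in the paper, 5 toy tokens
x = rng.normal(size=(seq_len, d_model))
# Random stand-ins for the learned projection matrices.
w_q, w_k, w_v, w_o = (
    rng.normal(size=(d_model, d_model)) / np.sqrt(d_model) for _ in range(4)
)
out = multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(out.shape)
```

With a model dimension of 512 and 8 heads, each head works on 64-dimensional queries, keys and values, so the concatenated output has the same dimension as the input.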

3. Input and Output Embedding

4. Positional Encoding

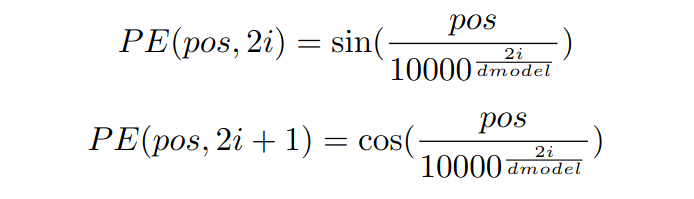

Because Transformers do not use recurrence, information about the positions must be added to the embedding. This is done using positional encodings: the sine function is used for the even dimensions and the cosine function for the odd dimensions of a vector that is added to our input or output embedding vector. The inputs to Equations 1 and 2 are the position pos and the dimension index i.
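
For reference, the sinusoidal positional encodings are defined in the original paper [1] as follows, where $d_{\text{model}}$ is the embedding dimension, $pos$ is the position in the sequence and $i$ indexes pairs of dimensions:

$$
PE_{(pos,\,2i)} = \sin\!\left(\frac{pos}{10000^{2i/d_{\text{model}}}}\right),
\qquad
PE_{(pos,\,2i+1)} = \cos\!\left(\frac{pos}{10000^{2i/d_{\text{model}}}}\right)
$$

Each pair of dimensions therefore shares one frequency, and the frequencies decrease geometrically as $i$ grows.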

The authors chose these functions because they hypothesized that they would allow the model to easily learn to attend by relative positions, since for any fixed offset k, PE(pos+k) can be represented as a linear function of PE(pos).
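
A short NumPy sketch can make both the encoding and the relative-position property concrete. It assumes the sinusoidal definition from the original paper (sine on even dimensions, cosine on odd dimensions, base 10000) and an even model dimension. The function name and the toy sizes are illustrative choices.

```python
import numpy as np

def positional_encoding(max_len, d_model):
    """Sinusoidal positional encodings of shape (max_len, d_model); d_model must be even."""
    positions = np.arange(max_len)[:, None]        # (max_len, 1)
    even_dims = np.arange(0, d_model, 2)[None, :]  # the values 2i
    angles = positions / np.power(10000.0, even_dims / d_model)
    pe = np.zeros((max_len, d_model))
    pe[:, 0::2] = np.sin(angles)  # even dimensions
    pe[:, 1::2] = np.cos(angles)  # odd dimensions
    return pe

d_model = 16
pe = positional_encoding(max_len=50, d_model=d_model)

# Relative-position property: for a fixed offset k, PE(pos + k) is obtained
# from PE(pos) by a rotation of each (sin, cos) pair that depends only on k.
pos, k = 10, 3
freqs = 1.0 / np.power(10000.0, np.arange(0, d_model, 2) / d_model)
sin_k, cos_k = np.sin(k * freqs), np.cos(k * freqs)
shifted_sin = pe[pos, 0::2] * cos_k + pe[pos, 1::2] * sin_k
shifted_cos = pe[pos, 1::2] * cos_k - pe[pos, 0::2] * sin_k

print(np.allclose(shifted_sin, pe[pos + k, 0::2]))
print(np.allclose(shifted_cos, pe[pos + k, 1::2]))
```

The rotation follows from the angle-addition identities for sine and cosine, and its coefficients do not depend on `pos`, which is what makes the mapping linear in PE(pos).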

5. Structure of a Transformer

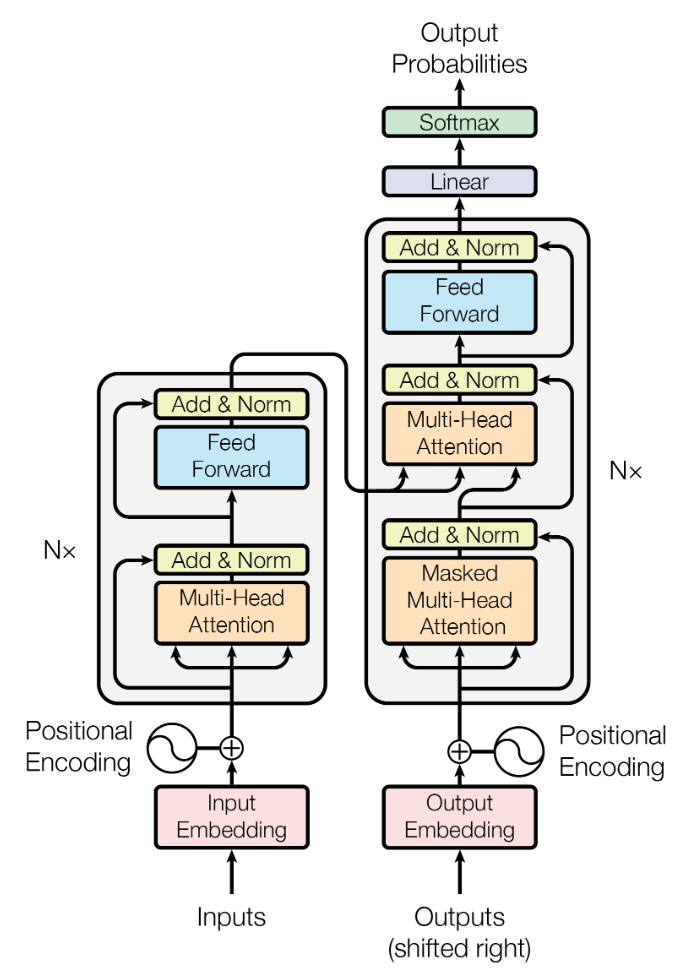

As visualized in Figure 5, a Transformer consists of an encoder and a decoder, in addition to input and output embeddings and a positional encoding. The encoder captures relationships between the words of the source sentence, whereas the decoder generates the desired output text sequence.

5.1. Encoder

The encoder is composed of a stack of N=6 identical layers (one after another). Each layer consists of two sub-layers, which are a Multi-Head Attention block and a fully-connected feed-forward network with two linear transformations. Around each sub-layer, a residual connection and a layer normalization are employed. This means that for each sub-layer the input is added to the output of the sub-layer and the result gets normalized. This enhances the backward gradient flow through the different layers of the model. To be able to use residual connections around each sub-layer, the dimension of the outputs of the different sub-layers has to stay the same. The authors chose a dimension of 512.
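
The residual connection and normalization around a sub-layer can be sketched as follows, using the feed-forward sub-layer as the example. Several details are assumptions for illustration: the weights are random instead of learned, the layer normalization omits its learnable gain and bias, and the hidden size of 2048 with a ReLU between the two linear transformations follows the original paper and is not stated in the text above.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    # Normalize each token vector to zero mean and unit variance.
    # The learnable gain and bias of a full layer norm are omitted here.
    mean = x.mean(axis=-1, keepdims=True)
    std = x.std(axis=-1, keepdims=True)
    return (x - mean) / (std + eps)

def feed_forward(x, w1, b1, w2, b2):
    # Two linear transformations with a ReLU in between.
    return np.maximum(0.0, x @ w1 + b1) @ w2 + b2

def add_and_norm(x, sublayer):
    # Residual connection followed by layer normalization.
    return layer_norm(x + sublayer(x))

rng = np.random.default_rng(0)
d_model, d_ff, seq_len = 512, 2048, 5
x = rng.normal(size=(seq_len, d_model))
# Random stand-ins for the learned feed-forward parameters.
w1 = rng.normal(size=(d_model, d_ff)) / np.sqrt(d_model)
w2 = rng.normal(size=(d_ff, d_model)) / np.sqrt(d_ff)
b1, b2 = np.zeros(d_ff), np.zeros(d_model)

out = add_and_norm(x, lambda t: feed_forward(t, w1, b1, w2, b2))
print(out.shape)
```

Because the sub-layer output is added to its input, both must have the same shape, which is why every sub-layer maps back to the model dimension of 512.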

5.2. Decoder

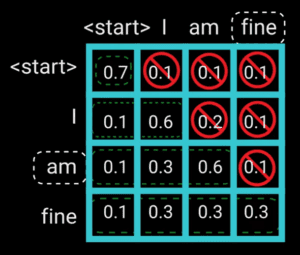

As the name suggests, the Masked Multi-Head Attention block involves a look-ahead mask that prevents the Transformer at time step t from obtaining information about relationships to words after time step t during training. To be more precise, the mask is applied to the target sequences the Transformer is trained on. Without this mask, information about the sentence, word or label we want to predict would be available during the forward pass while training the Transformer. Figure 6 illustrates which values should be masked, using “I am fine” as an example input. If we want to predict the next word after “am”, we should not have information about the following word(s), which in this case would be “fine”.

This mask consists of zeros at the indices where it should not block information and minus infinity at the indices where it should block it. To prevent the blocked information from passing, we add the look-ahead mask directly after scaling the dot product of the queries and keys. After the mask is added, the softmax function is applied to the resulting matrix, which turns the minus infinity values into zeros. This leads to the desired result: the predictions for time step t can depend only on the known outputs at time steps less than or equal to t. This process is illustrated in Figure 7.
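
The masking step can be sketched in NumPy for the three-token example “I am fine”. The random queries and keys, the toy key dimension and the function names are assumptions for illustration. Only the construction of the mask and the order of operations follow the description above.

```python
import numpy as np

def softmax(x, axis=-1):
    shifted = x - np.max(x, axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=axis, keepdims=True)

def look_ahead_mask(seq_len):
    # True strictly above the diagonal, i.e. at positions after the current one.
    future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    # 0 where information may pass, minus infinity where it is blocked.
    return np.where(future, -np.inf, 0.0)

tokens = ["I", "am", "fine"]
seq_len, d_k = len(tokens), 4

rng = np.random.default_rng(0)
queries = rng.normal(size=(seq_len, d_k))  # random stand-ins for real queries
keys = rng.normal(size=(seq_len, d_k))     # random stand-ins for real keys

scores = queries @ keys.T / np.sqrt(d_k)   # scale first
masked_scores = scores + look_ahead_mask(seq_len)  # then add the mask
weights = softmax(masked_scores)           # minus infinity becomes weight 0

print(look_ahead_mask(seq_len))
print(weights.round(3))
```

In the resulting weight matrix, the row for “I” places all of its weight on “I” itself, the row for “am” distributes its weight over “I” and “am” only, and only the row for “fine” can attend to all three tokens.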

5.3. Applications of Attention

5.4. Linear Classifier

6. Training

7. Results

8. Conclusion

The success of the attention mechanism and the Transformer indicates that they will probably be the foundation for many successful architectures in the future.

Tobias Morocutti

Tobias Morocutti studies Artificial Intelligence at Johannes Kepler University (JKU) in Linz. He is currently in the last semester of his Bachelor's programme and works at the Institute of Computational Perception at JKU, where he specializes in Audio Processing and Machine Learning. In 2022, before working at the Institute of Computational Perception, he participated in Task 1 (Low-Complexity Acoustic Scene Classification) of the annual DCASE challenge, where he ranked third. This year he is participating in the same challenge with his work colleagues. In addition to Audio Processing and Machine Learning in general, Tobias is also very interested in Computer Vision.

References

[1] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. Kaiser, and I. Polosukhin, “Attention is all you need”, CoRR, vol. abs/1706.03762, 2017. [Online]. Available: http://arxiv.org/abs/1706.03762

[2] M. Phi, “Illustrated guide to transformers neural network: A step by step explanation”, 2020. [Online]. Available: https://www.youtube.com/watch?v=4Bdc55j80l8

[3] A. Dosovitskiy, L. Beyer, A. Kolesnikov, D. Weissenborn, X. Zhai, T. Unterthiner, M. Dehghani, M. Minderer, G. Heigold, S. Gelly, J. Uszkoreit, and N. Houlsby, “An image is worth 16×16 words: Transformers for image recognition at scale”, 2021.

[4] D. Bahdanau, K. Cho, and Y. Bengio, “Neural machine translation by jointly learning to align and translate”, 2016.