Gender Bias in Recommender Systems:

Why Is It Important, Where Does It Come From, and How Can It Be Mitigated?

The goal of this blog post is to discuss the problem of bias in recommender systems, focusing on gender bias. Examples include why male users may receive better recommendations than other users, or why products made by people who do not identify with a certain gender are less likely to be recommended. We will explain why fairness in recommender systems matters, describe where bias comes from, and finally review methods for mitigating it.

1. Recommender systems

The amount of information available today far exceeds our information needs and processing capacity. Recommender systems are therefore widely used in daily life as tools to reduce information overload: they help us find items we need or like, and they point out items we would probably have missed otherwise. A recommender system is an information filtering system that uses large amounts of data to suggest the most relevant items to a user from a long list of possible choices. The items can range from books or articles to read, to the next video or music track to play.
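
The sketch below makes the idea of "suggesting the most relevant items" concrete using one common technique: item-based collaborative filtering with cosine similarity. It assumes NumPy and a small, hypothetical binary user-item interaction matrix. Real systems use far larger data and more elaborate models, so treat this only as an illustration.

```python
import numpy as np


def item_similarity(interactions: np.ndarray) -> np.ndarray:
    """Cosine similarity between the item columns of a user-item matrix."""
    norms = np.linalg.norm(interactions, axis=0)
    norms[norms == 0] = 1.0  # avoid division by zero for items nobody interacted with
    normalized = interactions / norms
    sim = normalized.T @ normalized
    np.fill_diagonal(sim, 0.0)  # an item should not recommend itself
    return sim


def recommend(interactions: np.ndarray, user: int, k: int) -> list[int]:
    """Return up to k unseen item indices, ranked by similarity to the user's items."""
    sim = item_similarity(interactions)
    scores = sim @ interactions[user]
    scores[interactions[user] > 0] = -np.inf  # do not re-recommend items already seen
    ranked = np.argsort(-scores, kind="stable")
    return [int(i) for i in ranked[:k] if np.isfinite(scores[i])]


# Hypothetical toy data: 4 users x 4 items, 1 = the user interacted with the item.
interactions = np.array([
    [1, 1, 0, 0],
    [1, 1, 1, 0],
    [0, 1, 1, 1],
    [0, 0, 1, 1],
], dtype=float)

print(recommend(interactions, user=0, k=2))  # [2, 3]
```

Item 2 ranks first for user 0 because other users often consumed it together with the items user 0 already has. The scores come entirely from other users' behavior, which is one way that biases in the collected interactions can propagate into recommendations.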

As users, we want recommendations to be relevant to us; that is, the recommender system should suggest items that we actually like. For businesses, good recommendations matter because they keep users on the site longer and lead them to buy more products or click more often on the suggested items. Think of all the targeted ads you see when scrolling through social media, the suggestions of which video or movie to play next on streaming services, or the “other users have also bought” recommendations when shopping online.

1.1 Why fair recommendations matter

As recommender systems play an increasingly important role in our everyday lives, they should provide not only good recommendations but also fair and responsible ones. The importance of fair recommender systems can be viewed from several perspectives. First, from an ethical perspective, fairness is an important virtue and a fundamental requirement for a just society. Second, from a legal perspective, anti-discrimination laws prohibit discrimination against groups of people based on gender, age, ethnicity, etc. Finally, from the user’s perspective, a fair recommender system provides relevant yet diverse information, including some niche content, without violating the user’s trust in the system [5].

2. Bias

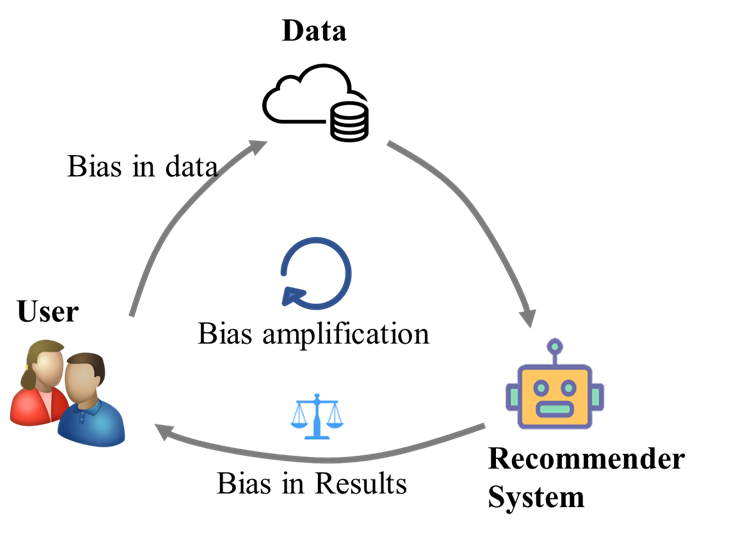

Bias is a concept closely related to fairness. In the context of recommender systems, bias can be thought of as a disproportionate weight in favor of or against an item or a group of people, usually in an unfair way. Of course, everyone prefers certain things over others, so we are all biased in some way, and our biases are reflected in our tastes. We expect recommender systems to give us suggestions that match our preferences, including our individual biases. However, it is unacceptable when biases aggregated from a group of users’ interactions with the system show up in the recommendations given to new users. Algorithms that do this reinforce biases throughout the system. New users interact with recommendations that are already biased, and these interactions are then used as input for future recommendations, creating a feedback loop.
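
The feedback loop described above can be illustrated with a deliberately simplified toy simulation. It assumes three things: a pure popularity recommender, hypothetical items labeled by their creator's gender, and users who can only click on what they are shown. The function names and numbers are illustrative assumptions, not a model of any real system.

```python
def group_share(counts, item_group, group):
    """Fraction of all interactions that went to items of the given creator group."""
    total = sum(counts.values())
    return sum(n for item, n in counts.items() if item_group[item] == group) / total


def simulate_feedback_loop(initial_counts, k, rounds, clicks_per_round):
    """Toy popularity recommender that is fed its own outputs every round."""
    counts = dict(initial_counts)
    history = []
    for _ in range(rounds):
        # Rank purely by past interactions (ties broken by item name).
        top_k = sorted(counts, key=lambda item: (-counts[item], item))[:k]
        # New users can only click what they are shown, so only top-k items gain.
        for item in top_k:
            counts[item] += clicks_per_round
        history.append(dict(counts))
    return history


# Hypothetical items labeled by their creator's gender, with a small initial edge for "M".
item_group = {"a": "M", "b": "M", "c": "F", "d": "F"}
initial_counts = {"a": 12, "b": 11, "c": 10, "d": 9}

history = simulate_feedback_loop(initial_counts, k=2, rounds=5, clicks_per_round=10)
print(round(group_share(initial_counts, item_group, "M"), 3))  # 0.548
print(round(group_share(history[-1], item_group, "M"), 3))     # 0.866
```

A small initial advantage (about 55% of interactions) grows to about 87% after five rounds, even though user preferences never changed. Real systems are noisier, but the same self-reinforcing mechanism can apply.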

3. Where do biases come from?

Recommender systems are affected by various biases which originate from unbalanced datasets, algorithms, content presentation (e.g., the position of items on a website), and also user cognition and perception.

3.1 Unbalanced datasets

One source of bias in datasets is the “gender digital divide”, i.e., the unequal access to and use of information and communication technology (ICT) between genders [7]. Because fewer women worldwide own a smartphone or access the Internet through a mobile phone [8], data collected by smartphone- and Internet-based services underrepresent women. Besides this sample size disparity, a second source of bias in the collected data is that the selected features may be less informative, or less reliably collected, for minority groups.
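
A simple audit of group representation and per-group data quality is a reasonable first step toward spotting such dataset bias. The sketch below assumes hypothetical records stored as dictionaries with a self-reported `gender` field and an optional `age` feature. The field names and values are made up for illustration.

```python
from collections import Counter


def group_shares(records, key="gender"):
    """Fraction of records belonging to each group."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def missing_rate_by_group(records, feature, key="gender"):
    """Fraction of records per group in which `feature` was not collected (None or absent)."""
    totals, missing = Counter(), Counter()
    for record in records:
        totals[record[key]] += 1
        if record.get(feature) is None:
            missing[record[key]] += 1
    return {group: missing[group] / totals[group] for group in totals}


# Hypothetical user records; "age" is None when it could not be collected.
records = (
    [{"gender": "male", "age": 30}] * 6
    + [{"gender": "female", "age": 25}] * 2
    + [{"gender": "female", "age": None}]
    + [{"gender": "non-binary", "age": None}]
)

print(group_shares(records))  # {'male': 0.6, 'female': 0.3, 'non-binary': 0.1}
print(missing_rate_by_group(records, "age"))  # {'male': 0.0, 'female': 0.3333333333333333, 'non-binary': 1.0}
```

In this made-up example, women and non-binary users are underrepresented, and their `age` feature is also missing more often. This mirrors the two sources of bias described above.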

3.2 Algorithmic bias

When a model captures the interaction patterns of the majority group more strongly than those of the minority group, it performs better for the majority group; this is called model bias or algorithmic bias. Unbalanced training data is a major cause of such model bias. However, different models trained on the same data produce results with varying degrees of unfairness. Some models even amplify the bias in the data, meaning that the disparity in their outputs is larger than the disparity already present in the underlying data.
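
Both effects described above, a performance gap between user groups and bias amplification, can be quantified with a few lines of code. The sketch assumes hypothetical per-user recall values and hypothetical recommendation lists. Recall is only one possible accuracy measure, and the function names are illustrative.

```python
from statistics import mean


def per_group_mean(values, groups):
    """Average a per-user metric (e.g. recall@k) separately for each user group."""
    buckets = {}
    for value, group in zip(values, groups):
        buckets.setdefault(group, []).append(value)
    return {group: mean(group_values) for group, group_values in buckets.items()}


def provider_share(item_lists, item_group, group):
    """Share of all listed items that belong to the given provider group."""
    items = [item for items_of_user in item_lists for item in items_of_user]
    return sum(item_group[item] == group for item in items) / len(items)


# Hypothetical per-user recall@k and user genders.
recall = [0.6, 0.5, 0.7, 0.3, 0.4]
user_gender = ["male", "male", "male", "female", "female"]
means = per_group_mean(recall, user_gender)
performance_gap = means["male"] - means["female"]

# Hypothetical items and their creators' group: training interactions vs. model output.
item_group = {"a": "M", "b": "M", "c": "M", "d": "F", "e": "F"}
train_interactions = [["a", "d"], ["b", "e"], ["c", "a"]]
recommendations = [["a", "b"], ["a", "c"], ["b", "d"]]
amplification = (provider_share(recommendations, item_group, "M")
                 - provider_share(train_interactions, item_group, "M"))

print(round(performance_gap, 2), round(amplification, 3))  # 0.25 0.167
```

A positive amplification value means that the majority group's items make up a larger share of the recommendations than of the training interactions.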

3.3 Development of recommender systems

Developing a recommender system involves many decisions, each of which can introduce bias. People must decide what data to collect, how to collect it, and how to label it. Most demographic data is labeled using simplified, binary female/male categories, which negatively impacts non-cisgender people. Developers first need to decide which datasets and variables to include in the recommender system. Then they need to select a model and set the rules that the algorithms follow to make predictions. Finally, they need to decide how to evaluate the quality of the generated recommendations. The workforce in artificial intelligence and data science is unequal and male-dominated, which affects the human decisions made at each of these stages [9].

4. Debiasing

The scientific community is becoming increasingly aware of the problem of bias in recommender systems, and efforts to mitigate it are growing accordingly. Most of these efforts focus on the algorithmic side, i.e., developing new metrics to quantify fairness and new machine learning techniques to remove biases from models.

Fairness metrics are measures that allow bias to be detected in data or models. Most fairness metrics have been proposed for outcome fairness, i.e., a fair distribution of the recommender system’s outputs. The metrics in use can be grouped into three categories: statistical metrics, individual fairness metrics, and causality-based fairness notions. Statistical fairness metrics compare one or more measures (e.g., accuracy or exposure) between groups in a given set of model predictions. Individual fairness is based on the idea that similar users should be treated similarly, regardless of sensitive attributes such as gender. Causality-based fairness notions analyze the dependence between the sensitive attribute(s) and the final decision for each cause of unfairness. Thus, unlike statistical measures, which rely only on correlation, they can also reveal the causal reasons for unfairness [5].
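
The following sketch illustrates the difference between statistical and individual fairness. It computes an exposure parity difference between two hypothetical item-provider groups, and it lists pairs of similar users whose recommendation lists barely overlap. The user features, the distance threshold `eps`, and the Jaccard overlap threshold are arbitrary assumptions chosen for illustration. Published metrics are usually more refined; for example, they often discount exposure by rank position.

```python
from itertools import combinations


def exposure_shares(rec_lists, item_group):
    """Statistical view: share of all recommendation slots going to each provider group."""
    counts = {}
    for items in rec_lists:
        for item in items:
            group = item_group[item]
            counts[group] = counts.get(group, 0) + 1
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def parity_difference(rec_lists, item_group, group_a, group_b):
    shares = exposure_shares(rec_lists, item_group)
    return shares.get(group_a, 0.0) - shares.get(group_b, 0.0)


def jaccard(list_a, list_b):
    a, b = set(list_a), set(list_b)
    return len(a & b) / len(a | b) if a | b else 1.0


def individual_fairness_violations(features, rec_lists, eps, min_overlap):
    """Individual view: similar users (L1 distance <= eps) whose lists overlap too little."""
    violations = []
    for u, v in combinations(range(len(features)), 2):
        distance = sum(abs(x - y) for x, y in zip(features[u], features[v]))
        if distance <= eps and jaccard(rec_lists[u], rec_lists[v]) < min_overlap:
            violations.append((u, v))
    return violations


item_group = {"a": "M", "b": "M", "c": "F", "d": "F"}
# Users 0 and 1 have identical (non-sensitive) features but, hypothetically, different genders.
features = [[0.9, 0.1], [0.9, 0.1], [0.2, 0.8]]
rec_lists = [["a", "b"], ["c", "d"], ["a", "c"]]

print(parity_difference(rec_lists, item_group, "M", "F"))  # 0.0
print(individual_fairness_violations(features, rec_lists, eps=0.1, min_overlap=0.5))  # [(0, 1)]
```

Here the two provider groups receive exactly equal exposure, so the statistical metric reports no bias. Yet users 0 and 1, who are identical in the hypothetical features, receive completely different recommendations. This is why statistical and individual metrics complement each other.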

Common debiasing algorithms for recommender systems can be divided into four main approaches: rebalancing, regularization, counterfactual fairness, and adversarial training [10]. They can be applied at three phases: pre-processing (on the data, before training), in-processing (during model training), and post-processing (on the generated recommendations).

4.1 Rebalancing

Rebalancing methods adjust the input data or the output recommendations so that they satisfy a fairness criterion (e.g., that demographic groups are equally represented). Common rebalancing approaches in the pre-processing phase include relabeling or resampling the training data to achieve an equal number of labels and/or samples in all groups. Rebalancing methods in the post-processing phase typically modify the list of recommendations to meet the targeted fairness measure(s) [11].
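
Both rebalancing phases can be sketched in a few lines. The pre-processing step randomly oversamples under-represented groups before training. The post-processing step is a greedy re-ranking that guarantees a minimum number of items from a protected group in each top-k list. Both are simple illustrative variants that assume particular data formats (records as dictionaries, items with a group label). They are not the specific methods evaluated in [11].

```python
import random
from collections import Counter


def oversample(records, key, seed=0):
    """Pre-processing: duplicate random records of smaller groups until all groups are equally large."""
    rng = random.Random(seed)
    by_group = {}
    for record in records:
        by_group.setdefault(record[key], []).append(record)
    target = max(len(group_records) for group_records in by_group.values())
    balanced = []
    for group_records in by_group.values():
        balanced.extend(group_records)
        balanced.extend(rng.choices(group_records, k=target - len(group_records)))
    return balanced


def fair_rerank(ranked_items, item_group, protected, k, min_protected):
    """Post-processing: keep the original order, but guarantee at least
    `min_protected` items from the protected group in the top k."""
    remaining = list(ranked_items)
    n = min(k, len(ranked_items))
    result = []
    for slot in range(n):
        placed = sum(item_group[item] == protected for item in result)
        candidates = remaining
        # If the quota can only be met by filling every remaining slot, promote a protected item.
        if min_protected - placed >= n - slot:
            protected_left = [item for item in remaining if item_group[item] == protected]
            if protected_left:
                candidates = protected_left
        choice = candidates[0]
        result.append(choice)
        remaining.remove(choice)
    return result


# Hypothetical training records and a hypothetical ranked list with creator groups.
records = [{"user": 1, "gender": "M"}, {"user": 2, "gender": "M"},
           {"user": 3, "gender": "M"}, {"user": 4, "gender": "F"}]
print(sorted(Counter(r["gender"] for r in oversample(records, "gender")).items()))  # [('F', 3), ('M', 3)]

item_group = {"a": "M", "b": "M", "c": "M", "d": "F", "e": "F"}
print(fair_rerank(["a", "b", "c", "d", "e"], item_group, "F", k=3, min_protected=1))  # ['a', 'b', 'd']
```

The re-ranking keeps the original order wherever possible. It promotes a protected item only when too few slots would otherwise remain to meet the quota.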

4.2 Regularization

Regularization methods achieve debiasing by formulating the fairness criterion as a regularization term that is added to the training objective and is minimized when the distribution of recommendations is fair [12].
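
Here is a minimal sketch of the idea, assuming that a single user's item scores are free parameters. Gradient descent minimizes a squared-error fit to the original relevance scores, plus λ times the squared difference in mean score between two hypothetical item groups. This is a generic illustration of a fairness regularizer, not the specific regularizer proposed in [12]. The weight `lam` controls the trade-off between relevance and fairness.

```python
import numpy as np


def fair_scores(relevance, in_group_a, lam, steps=2000, lr=0.05):
    """Minimize sum((s - relevance)**2) + lam * (mean_A(s) - mean_B(s))**2 by gradient descent."""
    relevance = relevance.astype(float)
    member = in_group_a.astype(float)
    # v @ s equals the mean score of group A minus the mean score of group B.
    v = member / member.sum() - (1 - member) / (1 - member).sum()
    s = relevance.copy()  # start from the unregularized optimum
    for _ in range(steps):
        gap = v @ s
        grad = 2 * (s - relevance) + 2 * lam * gap * v  # accuracy term + fairness regularizer
        s -= lr * grad
    return s


# Hypothetical relevance scores for four items; the first two come from creators in group A.
relevance = np.array([4.0, 5.0, 2.0, 3.0])
in_group_a = np.array([True, True, False, False])

for lam in (0.0, 10.0):
    s = fair_scores(relevance, in_group_a, lam)
    print(lam, round(s[:2].mean() - s[2:].mean(), 3))
# prints "0.0 2.0" and then "10.0 0.182"
```

With λ = 10, the gap in mean scores shrinks from 2.0 to 2/11 ≈ 0.18, while the order of items within each group is preserved. A larger λ pushes the gap closer to zero, at the cost of deviating further from the original relevance scores.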

4.3 Counterfactual fairness

In the case of counterfactual fairness, a decision is considered fair toward an individual if it is the same in the real world and in a counterfactual world in which the individual belongs to a different demographic group. This approach allows algorithms to take into account the social biases that may arise against individuals based on ethically sensitive attributes (e.g., gender, ethnicity). It also lets them compensate for these biases, rather than simply ignoring the sensitive attributes [13].
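
Counterfactual fairness [13] can be illustrated with a tiny, hypothetical linear structural causal model. In it, an observed feature `x` depends on the sensitive attribute `a` and on a latent background variable `u` (x = ALPHA · a + u). The sketch computes the counterfactual in two steps: it first infers `u`, then recomputes `x` with `a` flipped. It then compares two hypothetical scoring models. The causal model and its coefficient are assumptions made purely for illustration.

```python
ALPHA = 2.0  # hypothetical causal effect of the sensitive attribute on the feature


def infer_background(x, a):
    """Abduction: recover the latent background variable u from x = ALPHA * a + u."""
    return x - ALPHA * a


def counterfactual_feature(x, a, a_cf):
    """Recompute x in the world where the sensitive attribute is a_cf, keeping u fixed."""
    return ALPHA * a_cf + infer_background(x, a)


def score_from_feature(x, a):
    """Hypothetical model that uses the observed feature and ignores a."""
    return 0.5 * x


def score_from_background(x, a):
    """Hypothetical model that uses only the inferred background variable."""
    return 0.5 * infer_background(x, a)


def counterfactual_gap(score_fn, x, a):
    a_cf = 1 - a  # flip a binary sensitive attribute
    x_cf = counterfactual_feature(x, a, a_cf)
    return abs(score_fn(x, a) - score_fn(x_cf, a_cf))


print(counterfactual_gap(score_from_feature, x=3.0, a=1))     # 1.0
print(counterfactual_gap(score_from_background, x=3.0, a=1))  # 0.0
```

The model that uses `x` directly changes its score when the attribute is flipped, so it is not counterfactually fair. The model that uses only the inferred background variable gives the same score in both worlds. Simply dropping `a` from the model's inputs would not be enough, because `x` still carries its influence.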

4.4 Adversarial training

Generating relevant recommendations without leaking the user’s private attribute information (e.g., gender) into the system can be formulated as an adversarial training problem. It is modeled as a min-max game between a recommender component and an attacker component. The recommender aims to generate recommendation lists that are relevant to users while preventing a potential adversary from inferring their private attributes from these lists [14].
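
The min-max structure can be shown in a deliberately tiny NumPy example, built on the following assumptions:

- A one-dimensional user representation z = a·p + b·g, built from a hypothetical preference signal p and a binary gender label g.
- Relevance targets y = p + g, which correlate with gender.
- An attacker that is a linear least-squares predictor of g from z, refit to its optimum at every step. This differs from [14], where neural networks are trained with alternating gradient updates.

The recommender minimizes its own prediction error minus λ times the attacker's error.

```python
import numpy as np

# Hypothetical toy data: every (preference p, gender g) combination appears once,
# so p and g are independent, but the relevance target y correlates with g.
p = np.array([-1.0, 1.0, -1.0, 1.0])
g = np.array([0.0, 0.0, 1.0, 1.0])
y = p + g


def attacker_best_response(z):
    """Attacker: least-squares prediction of g from the representation z (with intercept)."""
    design = np.column_stack([z, np.ones_like(z)])
    (c, d), *_ = np.linalg.lstsq(design, g, rcond=None)
    return c, d


def train(lam, steps=3000, lr=0.05):
    """Recommender: learn z = a * p + b * g, minimizing its error minus lam * attacker error."""
    a, b = 1.0, 0.0
    for _ in range(steps):
        z = a * p + b * g
        c, d = attacker_best_response(z)  # the attacker adapts to the current representation
        rec_err = z - y
        adv_err = c * z + d - g
        # Gradient of the recommender's squared error ...
        grad_a = np.mean(2 * rec_err * p)
        grad_b = np.mean(2 * rec_err * g)
        # ... minus lam times the gradient of the attacker's squared error
        # (the recommender is rewarded when the attacker fails).
        grad_a -= lam * np.mean(2 * adv_err * c * p)
        grad_b -= lam * np.mean(2 * adv_err * c * g)
        a -= lr * grad_a
        b -= lr * grad_b
    return float(a), float(b)


_, b_plain = train(lam=0.0)
_, b_private = train(lam=8.0)
print(round(b_plain, 3), round(b_private, 2))
```

Without the adversarial term, the representation encodes gender with weight b ≈ 1. With λ = 8, the weight drops to roughly 0.6, trading some prediction accuracy for less leakage of the private attribute.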

4.5 Conclusions

However, to address the problem of unfairness comprehensively, we must view it in a broader context: as a social problem that requires solutions beyond fairness metrics and new algorithmic interventions. As discussed above, many decisions that can introduce bias are made while developing a recommender system. Diversifying the teams that develop and manage artificial intelligence systems may therefore reduce bias in these systems. A recent study suggested that demographically diverse teams are better at reducing algorithmic bias [15]. It is therefore important to promote (gender) diversity, equality, and inclusion in the field of artificial intelligence. It is also important to give a voice to minorities and marginalized members of the community who are underrepresented in datasets. Last but not least, codes, policies, and laws for responsible artificial intelligence must include equity and equality with respect to gender, minorities, and other marginalized identities.

Angelika Vižintin

Angelika Vižintin received her PhD in Biosciences at the University of Ljubljana, Slovenia. In her PhD thesis, she studied the effects of short high-voltage electric pulses on animal cell lines and their potential application, for example, for electrochemotherapy, a local cancer treatment that combines membrane-permeabilizing electric pulses and chemotherapeutic drugs. She is currently taking courses in machine learning, mathematical foundations of artificial intelligence, computer science, etc. in the Artificial Intelligence programme at Johannes Kepler University in Linz, Austria, with the goal of learning how to use artificial intelligence tools in biological/biomedical research. She also produces science-related programs for the Slovenian Radio Študent, one of the oldest and strongest independent radio stations in Europe. Among the topics she reports on most frequently are the biology of the female body and women’s diseases, biotechnology, genetically modified organisms, molecular biology, and artificial intelligence in the life sciences. In her free time, she enjoys listening to music and traveling.

References

[1] M. D. Ekstrand, M. Tian, I. M. Azpiazu, J. D. Ekstrand, O. Anuyah, D. McNeill, and M. S. Pera, “All the cool kids, how do they fit in?: Popularity and demographic biases in recommender evaluation and effectiveness,” 21-Jan-2018. [Online]. Available: https://proceedings.mlr.press/v81/ekstrand18b.html.

[2] M. D. Ekstrand and D. Kluver, “Exploring Author Gender in Book Rating and Recommendation.” arXiv, 2018. doi: 10.48550/ARXIV.1808.07586.

[3] A. Lambrecht and C. Tucker, “Algorithmic Bias? An Empirical Study of Apparent Gender-Based Discrimination in the Display of STEM Career Ads,” Management Science, vol. 65, no. 7. Institute for Operations Research and the Management Sciences (INFORMS), pp. 2966–2981, Jul. 2019. doi: 10.1287/mnsc.2018.3093.

[4] J. Dastin, “Amazon Scraps Secret AI Recruiting Tool that Showed Bias against Women,” in Ethics of data and analytics: concepts and cases, 1st ed., K. Martin, Ed. New York: Auerbach Publications, 2022.

[5] Y. Wang, W. Ma, M. Zhang, Y. Liu, and S. Ma, “A Survey on the Fairness of Recommender Systems,” ACM Transactions on Information Systems, vol. 41, no. 3. Association for Computing Machinery (ACM), pp. 1–43, Feb. 07, 2023. doi: 10.1145/3547333.

[6] “Bias Issues and Solutions in Recommender System.” [Online]. Available: https://lds4bias.github.io/.

[7] A. Acilar and Ø. Sæbø, “Towards understanding the gender digital divide: a systematic literature review,” Global Knowledge, Memory and Communication, vol. 72, no. 3. Emerald, pp. 233–249, Dec. 16, 2021. doi: 10.1108/gkmc-09-2021-0147.

[8] GSMA, “The Mobile Gender Gap Report 2022.” [Online]. Available: https://www.gsma.com/r/wp-content/uploads/2022/06/The-Mobile-Gender-Gap-Report-2022.pdf.

[9] A. Nadeem, B. Abedin, and O. Marjanovic, “Gender bias in AI: A review of contributing factors and mitigating strategies,” ACIS 2020 Proceedings, vol. 27. Available: https://aisel.aisnet.org/acis2020/27.

[10] J. Chen, H. Dong, X. Wang, F. Feng, M. Wang, and X. He, “Bias and Debias in Recommender System: A Survey and Future Directions.” arXiv, 2020. doi: 10.48550/ARXIV.2010.03240.

[11] A. B. Melchiorre, N. Rekabsaz, E. Parada-Cabaleiro, S. Brandl, O. Lesota, and M. Schedl, “Investigating gender fairness of recommendation algorithms in the music domain,” Information Processing & Management, vol. 58, no. 5. Elsevier BV, p. 102666, Sep. 2021. doi: 10.1016/j.ipm.2021.102666.

[12] H. Abdollahpouri, R. Burke, and B. Mobasher, “Controlling Popularity Bias in Learning-to-Rank Recommendation,” Proceedings of the Eleventh ACM Conference on Recommender Systems. ACM, Aug. 27, 2017. doi: 10.1145/3109859.3109912.

[13] M. J. Kusner, J. R. Loftus, C. Russell, and R. Silva, “Counterfactual Fairness.” arXiv, 2017. doi: 10.48550/ARXIV.1703.06856.

[14] G. Beigi, A. Mosallanezhad, R. Guo, H. Alvari, A. Nou, and H. Liu, “Privacy-Aware Recommendation with Private-Attribute Protection using Adversarial Learning,” Proceedings of the 13th International Conference on Web Search and Data Mining. ACM, Jan. 20, 2020. doi: 10.1145/3336191.3371832.

[15] B. Cowgill, F. Dell’Acqua, S. Deng, D. Hsu, N. Verma, and A. Chaintreau, “Biased Programmers? Or Biased Data? A Field Experiment in Operationalizing AI Ethics,” SSRN Electronic Journal. Elsevier BV, 2020. doi: 10.2139/ssrn.3615404.